DHH Cyber Community

The goal of the Deaf and Hard of Hearing Cyber-Community in STEM (DHH Cyber-Community) project is to use existing cyberinfrastructure connections between universities to advance deaf and hard of hearing students in science, technology, engineering, and mathematics (STEM).

MobileASL

MobileASL is a video compression project at the University of Washington with the goal of making wireless cell phone communication through sign language a reality in the U.S.

Tactile Graphics Project

The Tactile Graphics Project aims to increase universal benefit from graphical images (e.g., line graphs, bar charts, and illustrations). It is a multidisciplinary project with researchers and practitioners from UW’s Department of Computer Science and Engineering, Access Technology Lab, and DO-IT. Our goal is to enable K-12, undergraduate, and graduate students who are blind to have full access to mathematics, engineering, and science.

WebInSight

The WebInSight Project is a collection of projects designed to make the web more accessible to blind web users.

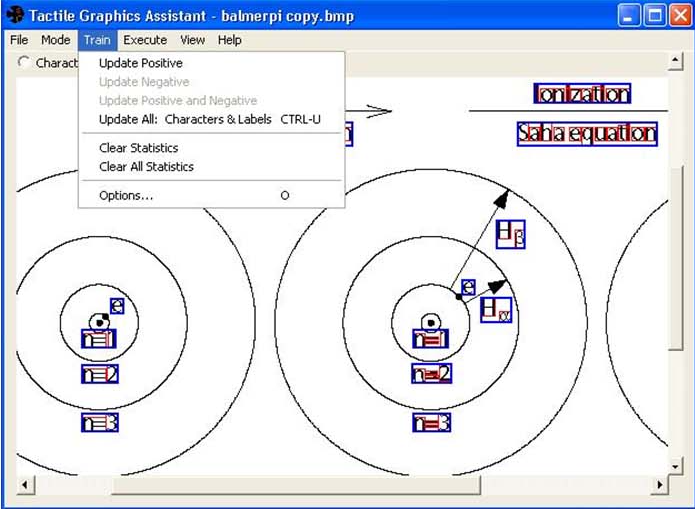

Improving Access To Graphical Images for Blind Students

The Tactile Graphics Assistant (TGA) is a program created at the University of Washington to aid in the tactile image translation process. The TGA separates text from an image so that the text can later be replaced by Braille and inserted back onto the image. In order to streamline the text selection process, the TGA employs machine learning to recognize text so that large groups (possibly hundreds) of images can be translated at a time.

MobileASL

MobileASL is a video compression project at the University of Washington and Cornell University with the goal of making wireless cell phone communication through sign language a reality in the U.S.

More about MobileASL

With the advent of cell phone PDAs with larger screens and photo/video capture, people who communicate with American Sign Language (ASL) could make use of these new technologies. However, due to the low bandwidth of the U.S. wireless telephone network, even today’s best video encoders likely cannot produce the video quality needed for intelligible ASL. Instead, a new real-time video compression scheme is needed to transmit within the existing wireless network while maintaining video quality that allows users to understand the semantics of ASL with ease. For this technology to exist in the immediate future, the MobileASL project is designing new ASL encoders, built with x264, that are compatible with the new H.264/AVC compression standard (which nearly doubles the compression ratios of MPEG-2). The result will be a video compression metric that takes into account empirically validated visual and perceptual processes that occur during conversations in ASL.

People already use cell phones for sign language communication in countries like Japan and Sweden where 3G (higher bandwidth) networks are ubiquitous. See videos from Sweden.

This material is based upon work supported by the National Science Foundation under Grant Nos. IIS-0514353 and IIS-0811884, and by Sprint, Nokia, and HTC.

MobileAccessibility

MobileAccessibility is an entirely different approach to providing useful mobile functionality to blind, low-vision, and deaf-blind users. This new approach leverages the sensors that modern cell phones already have to keep devices cheap, and uses remote web services to process requests. Importantly, both fully-automated and human-powered web services are used to balance the cost and capability of the services available.
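One way to picture the balance between automated and human-powered services is a simple fallback rule. The sketch below is illustrative only and is not the project's implementation: the `Answer` type, the `answer_request` function, the confidence score, and the "try the automated service first" ordering are all assumptions introduced here, and the two services are passed in as plain callables rather than real web endpoints.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Answer:
    text: str          # what the service reports back to the user
    confidence: float  # hypothetical score between 0.0 and 1.0
    source: str        # "automated" or "human"


def answer_request(
    payload: bytes,
    automated: Callable[[bytes], Optional[Answer]],
    human: Callable[[bytes], Answer],
    min_confidence: float = 0.8,
) -> Answer:
    """Try the cheap automated service first (an assumed policy).

    Fall back to the slower, costlier human-powered service only when
    the automated service fails or is not confident enough.
    """
    result = automated(payload)
    if result is not None and result.confidence >= min_confidence:
        return result
    return human(payload)
```

Under this assumed policy, raising `min_confidence` sends more requests to people (more capable, more costly), while lowering it keeps more requests on the automated path.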

More about MobileAccessibility

We are developing applications on mobile phones using Google’s Android platform, and on the iPhone. We are designing, implementing, and evaluating our prototypes with blind, low-vision, and deaf-blind participants, through focus groups, interviews, lab studies, and field studies.

Current Projects:

- V-Braille: Haptic Braille Perception using the Touch Screen and Vibration on Mobile Phones

- Talking Barcode Reader

- Color Namer

- Talking Calculator

- Talking Level

- BrailLearn and BrailleBuddies: V-Braille Games for Teaching Children Braille

- FocalEyes Camera Focalization Project: Camera Interaction with Blind Users

- CameraEyes Photo Guide: Enabling Blind Users To Take Framed Photographs of People

- Mobile OCR

- Appliance Reader

- Accessible Tactile Graphics with the Digital Pen

- LocalEyes: Accessible GPS for Exploring Places and Businesses

- ezTasker: Visual and Audio Task Aid for People with Cognitive Disabilities

- Transportation Bus Guide for Deaf-Blind Commuters

Projects are described in more detail in the Projects section.

MobileAccessibility is a joint project between the University of Washington and the University of Rochester.

We would like to acknowledge and thank Google for their help in this project, as well as our blind and deaf-blind research participants.

ASL-STEM

The ASL-STEM Forum is part of a research venture at the University of Washington which seeks to remove a fundamental obstacle currently in the way of deaf scholars, both students and professionals. Due to its relative youth and widely dispersed user base, American Sign Language (ASL) has never developed standardized vocabulary for the many terms that have arisen in advanced Science, Technology, Engineering, and Mathematics (STEM) fields.

More about ASL-STEM

The lack of standard STEM vocabulary in ASL makes it hard for deaf students to learn in their native language, and it makes communication between deaf and hearing scientists and engineers far more difficult.

As any intro linguistics student could tell you, language use and evolution cannot be dictated by the few, no matter their expertise. This approach has failed time and again throughout history, and, no doubt, this case will be no exception. Instead, languages change because their users choose to change them.

This Forum is an attempt to bring together you, the ASL users of North America, so that you can, of your own accord, introduce the necessary vocabulary to your language. This will make it much easier for those in the Deaf community to pursue careers in technical fields.

We have 229 users, 2444 topics, and 1806 signs, with more being added all the time.

The ASL-STEM Forum is part of the Deaf and Hard of Hearing Cyber Community project based out of the University of Washington’s Computer Science and Engineering department. The project is led by UW CSE Professor Richard Ladner, along with a number of others.

WebAnywhere

WebAnywhere is a non-visual interface to the web that requires no new software to be downloaded or installed. It works right in the browser, which means you can access it from any computer, even locked-down public computer terminals. WebAnywhere enables you to interact with the web in a similar way to how you may have used other screen readers before.

V-Braille

Modern smart phones like the iPhone, Windows phones, and Google Android phones can output speech and vibrations, which makes them suitable as multi-modal devices for blind children to learn Braille as well as other knowledge that will be useful throughout life. V-Braille is an infrastructure for smart phones that employs vibration as the means for reading Braille. Additionally, it uses both vibration and text-to-speech for entering (or “writing”) Braille.
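To make the idea of reading Braille through vibration concrete, here is a minimal sketch. It assumes, for illustration only, that the touch screen is split into a grid of two columns by three rows, one region per dot of a standard six-dot Braille cell, and that the phone vibrates while a finger rests on a region whose dot is raised. The function names and the screen dimensions in the usage note are hypothetical; only the dot patterns for the letters shown are standard Braille.

```python
# Standard six-dot Braille cell numbering:
#   1 4
#   2 5
#   3 6
BRAILLE_DOTS = {
    "a": {1},
    "b": {1, 2},
    "c": {1, 4},
    "d": {1, 4, 5},
    "e": {1, 5},
}


def region_for_touch(x: float, y: float, width: float, height: float) -> int:
    """Map a touch point to a dot number (1-6) on a 2-column by 3-row grid."""
    col = 0 if x < width / 2 else 1
    # Clamp so a touch on the bottom edge still falls in the last row.
    row = min(int(y / (height / 3)), 2)
    return col * 3 + row + 1


def should_vibrate(letter: str, x: float, y: float,
                   width: float, height: float) -> bool:
    """Return True when the touched region holds a raised dot of the letter."""
    return region_for_touch(x, y, width, height) in BRAILLE_DOTS[letter]
```

For example, on an assumed 320 by 480 screen showing the letter "d", `should_vibrate("d", 300, 250, 320, 480)` is true because the touch lands in the region for dot 5, which is raised in "d".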