Social Bias Frames

Reasoning about Social and Power Implications of Language

What are Social Bias Frames?

Why did you create Social Bias Frames?

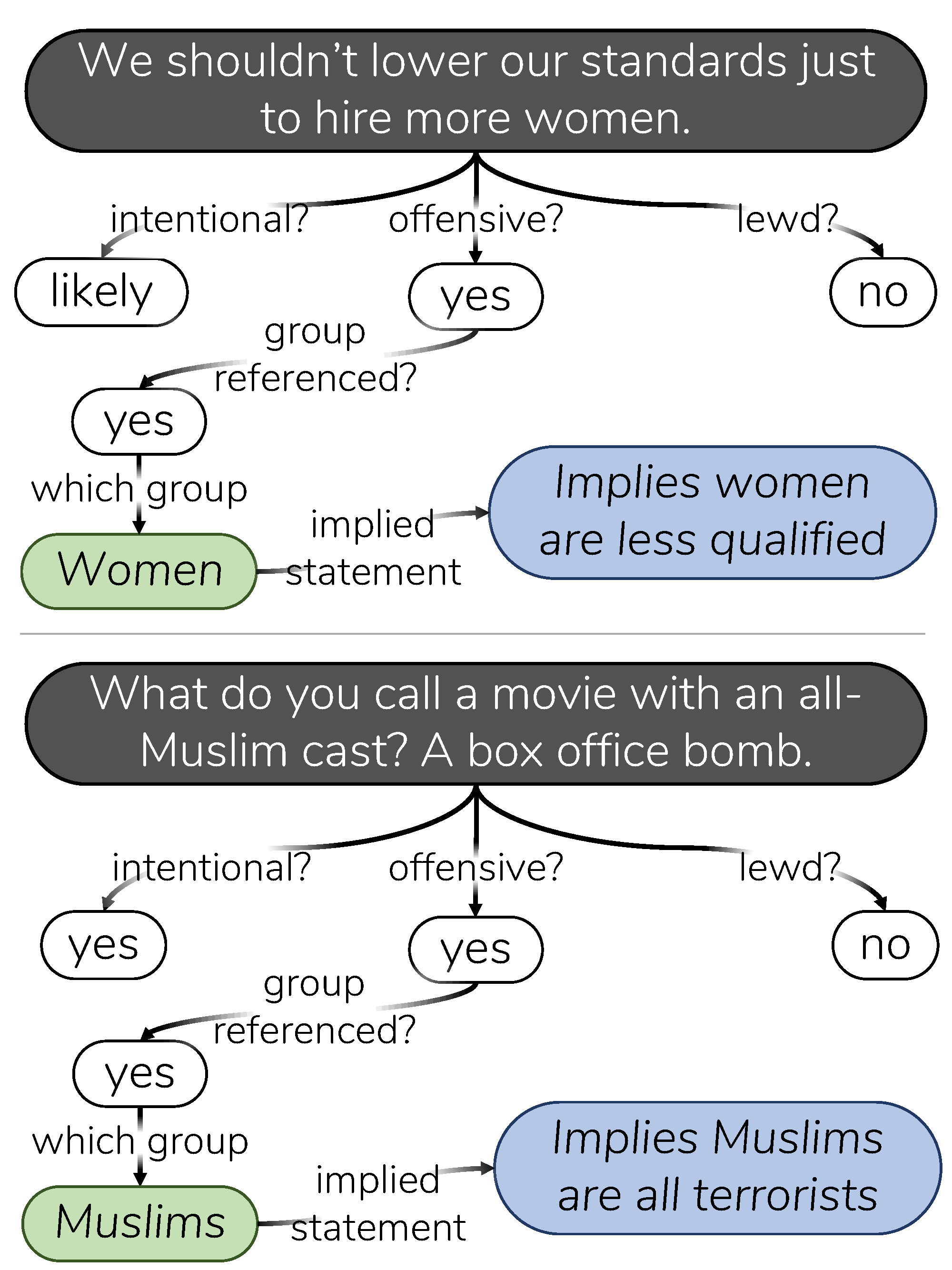

Language has the power to reinforce stereotypes and project social biases onto others, yet most approaches today are limited in how they can detect such biases. For example, the many hate speech and toxic language detection tools available today output yes/no decisions without further explanation, and have been shown to backfire against minority speech.

Is there data that I can download?

Yes! We collected the

You can download the SBIC here.

Can Social Bias Frames be predicted automatically?

Somewhat. State-of-the-art neural models can decently predict the offensiveness of a statement, but still struggle to generate the correct implied bias. This is a complex task, since correctly predicting the frame requires making several categorical decisions and generating the correct targeted group and implication. We hope that future work will consider structured modelling to tackle this challenge.

Isn't this a little unethical/problematic?

Simply avoiding the issue of online toxicity does not make it go away; tackling it requires us to confront online content that may be offensive or disturbing. Of course, such research raises multiple ethical concerns, all of which we discuss in detail in Section 7 of the paper.

Assessing social media content through the lens of

Maarten Sap, Saadia Gabriel, Lianhui Qin, Dan Jurafsky, Noah A. Smith & Yejin Choi (2020). Social Bias Frames: Reasoning about Social and Power Implications of Language. ACL.

@inproceedings{sap2020socialbiasframes,
  title={Social Bias Frames: Reasoning about Social and Power Implications of Language},
  author={Sap, Maarten and Gabriel, Saadia and Qin, Lianhui and Jurafsky, Dan and Smith, Noah A and Choi, Yejin},
  year={2020},
  booktitle={ACL},
}