Selected Projects

Disclaimer: Below are some impactful projects that have come out of my research. Unfortunately, I have not kept this page up to date -- please see my latest publications for my research interests.

Adaptive Multiple Testing with FDR Control

Consider N possible treatments, say, drugs in a clinical trial, where each treatment either has a positive expected effect or makes no difference. If evaluating the ith treatment yields a noisy outcome, how do we adaptively decide which treatment to try next when the goal is to discover as many true positives as possible while keeping the proportion of false discoveries at or below 0.05? Our solution was efficient enough that we worked with Optimizely, the largest experimentation platform for A/B/n testing on the web, to implement our algorithm for use by some of the web's most successful companies.
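
To make the false discovery constraint concrete, here is a minimal sketch of the classic Benjamini-Hochberg step-up procedure, which controls the false discovery rate at a chosen level for a fixed batch of p-values. This is the standard non-adaptive baseline, not the adaptive algorithm described above; the function name `benjamini_hochberg` and the example p-values are illustrative assumptions.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return the sorted indices of hypotheses rejected at FDR level alpha.

    Classic (non-adaptive) Benjamini-Hochberg step-up procedure, shown only
    to illustrate what "false discovery proportion bounded by 0.05" means.
    """
    m = len(p_values)
    # Indices of the hypotheses, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])

    # Find the largest rank k whose p-value is at most alpha * k / m.
    k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= alpha * rank / m:
            k = rank

    # Reject every hypothesis ranked at or below k (the step-up rule).
    return sorted(order[:k])


# Hypothetical p-values for ten treatments (made up for illustration).
example_p_values = [0.001, 0.008, 0.039, 0.041, 0.042,
                    0.06, 0.074, 0.205, 0.212, 0.216]
discoveries = benjamini_hochberg(example_p_values, alpha=0.05)
```

Because the rule is step-up, a hypothesis can be rejected even when its own p-value exceeds its threshold, as long as a larger-ranked p-value clears its threshold. The adaptive setting differs in that the samples behind each p-value are chosen sequentially rather than fixed in advance.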

Optimizing Crowdfunding Platforms

Kiva is a nonprofit crowdfunding platform with a mission to help alleviate poverty around the world by enabling anyone in the crowd to lend as little as $25 to a borrower to help them start or grow a business, go to school, access clean energy or realize their potential. Unfortunately, borrowers outnumber lenders, and not all projects can hit their reserve price and be funded. The challenge for the crowdfunding platform is deciding how to prioritize loans--what lenders see when they look at the website--to maximize the total number of fully funded projects. This objective stands in contrast to maximizing the total number of lending events, which is analogous to click-through rate. We model this problem and propose an algorithm for this setting in our conference paper, and we are actively working with Kiva to implement it in their system. The same principles apply directly to other crowdfunding systems as well (e.g., Kickstarter, IndieGoGo).

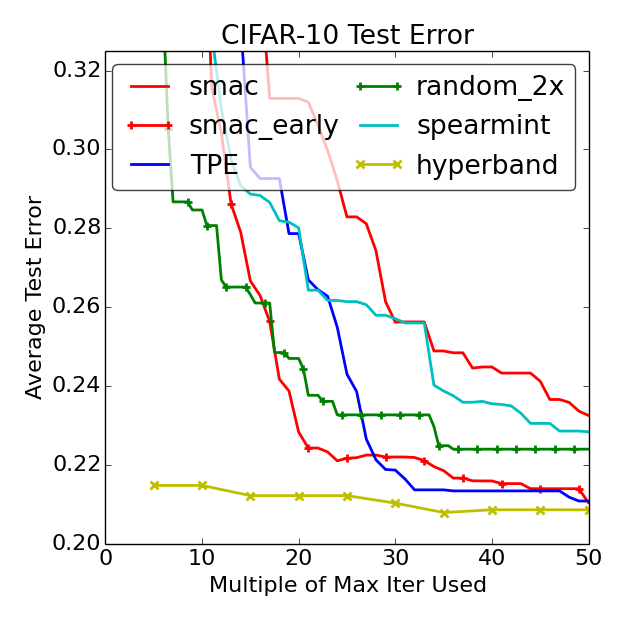

Hyperband: Bandits for hyperparameter tuning

Hyperband is a method for speeding up hyperparameter search. In contrast to Bayesian methods that focus effort on making better selections, Hyperband uses simple random search but exploits the iterative nature of training algorithms using recent advances in pure-exploration multi-armed bandits. Speedups of up to orders of magnitude over Bayesian optimization are achievable on deep learning tasks.
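
The idea of exploiting iterative training can be sketched with successive halving, the bandit-style subroutine that Hyperband builds on: train many randomly sampled configurations for a small budget, keep the best fraction, and repeat with a larger budget. The sketch below is a simplified illustration, not the full Hyperband algorithm (which additionally runs several such brackets with different trade-offs between the number of configurations and the starting budget). The names `successive_halving` and `toy_loss`, the `lr` hyperparameter, and the loss function are all hypothetical.

```python
import random


def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Return the surviving configuration after repeated elimination.

    evaluate(config, budget) must return a loss (lower is better) after
    training `config` with `budget` units of resource (e.g. epochs).
    """
    survivors = list(configs)
    if not survivors:
        raise ValueError("need at least one configuration")

    budget = min_budget
    while len(survivors) > 1:
        # Score every surviving configuration at the current budget.
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget))
        # Keep the best 1/eta fraction (at least one configuration).
        survivors = ranked[:max(1, len(ranked) // eta)]
        # Give the survivors eta times more resource in the next round.
        budget *= eta
    return survivors[0]


def toy_loss(config, budget):
    """Stand-in for training: loss shrinks with budget, best lr is 0.1."""
    return (config["lr"] - 0.1) ** 2 + 1.0 / budget


# Simple random search: sample learning rates log-uniformly in [1e-3, 1].
rng = random.Random(0)
candidates = [{"lr": 10 ** rng.uniform(-3, 0)} for _ in range(27)]
best = successive_halving(candidates, toy_loss, min_budget=1, eta=3)
```

With 27 candidates and `eta=3`, the rounds evaluate 27, 9, and 3 configurations at budgets 1, 3, and 9, so most of the total resource is spent on the few configurations that looked promising early.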

The New Yorker Caption Contest

Each week, the New Yorker magazine runs a cartoon contest where readers are invited to submit a caption for that week's cartoon, and thousands are submitted. The NEXT team has teamed up with Bob Mankoff, cartoon editor of the New Yorker, to use crowdsourcing and adaptive sampling techniques to help decide the caption contest winner each week. This is an example of state-of-the-art active learning being implemented and evaluated in the real world using the NEXT system and the principles developed in the NEXT paper.

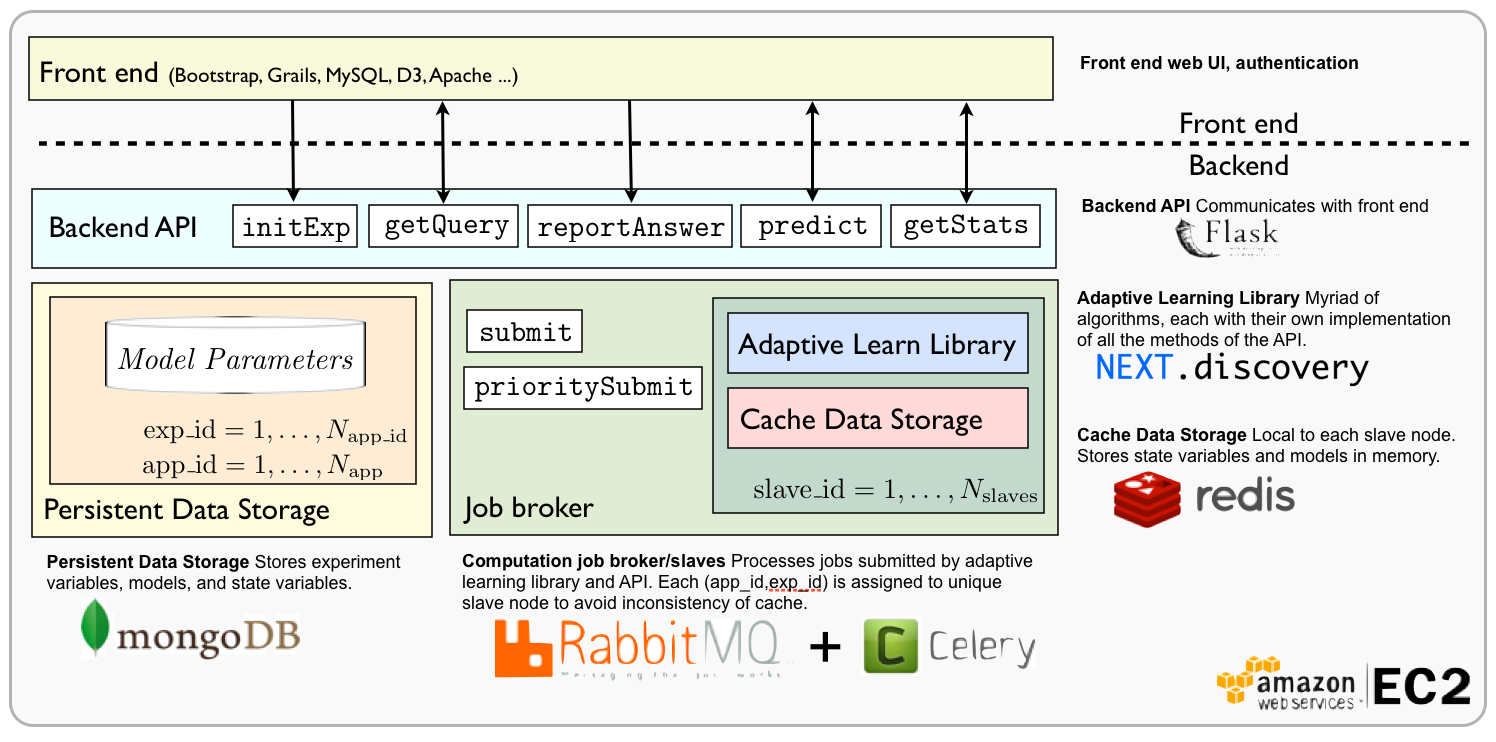

NEXT

NEXT is a computational framework and open-source machine learning system that simplifies the deployment and evaluation of active learning algorithms that use human feedback, e.g., from Mechanical Turk. The system is optimized for the real-time computational demands of active learning algorithms and built to scale to a crowd of workers of any size. It is intended for active learning researchers as well as practitioners who want to collect data adaptively.

Beer Mapper

Beer Mapper began as a practical implementation of my theoretical active ranking work on an iPhone/iPad, intended simply as a proof of concept and a cool prop to use in presentations of the theory. A brief page on my website describing how it worked collected dust for several months until several blogs found it, which translated into heavy traffic and interest in bringing it to the App Store. I teamed up with Savvo, a tech startup based in Chicago, which is now leading the development of the app.