Battery-free Computer Vision on Insect-scale Microrobots

V. Arroyos*, M. Ibrahim*, E. Mensah*, and 3 more authors

IEEE International Conference on Robotics and Automation, May 2025

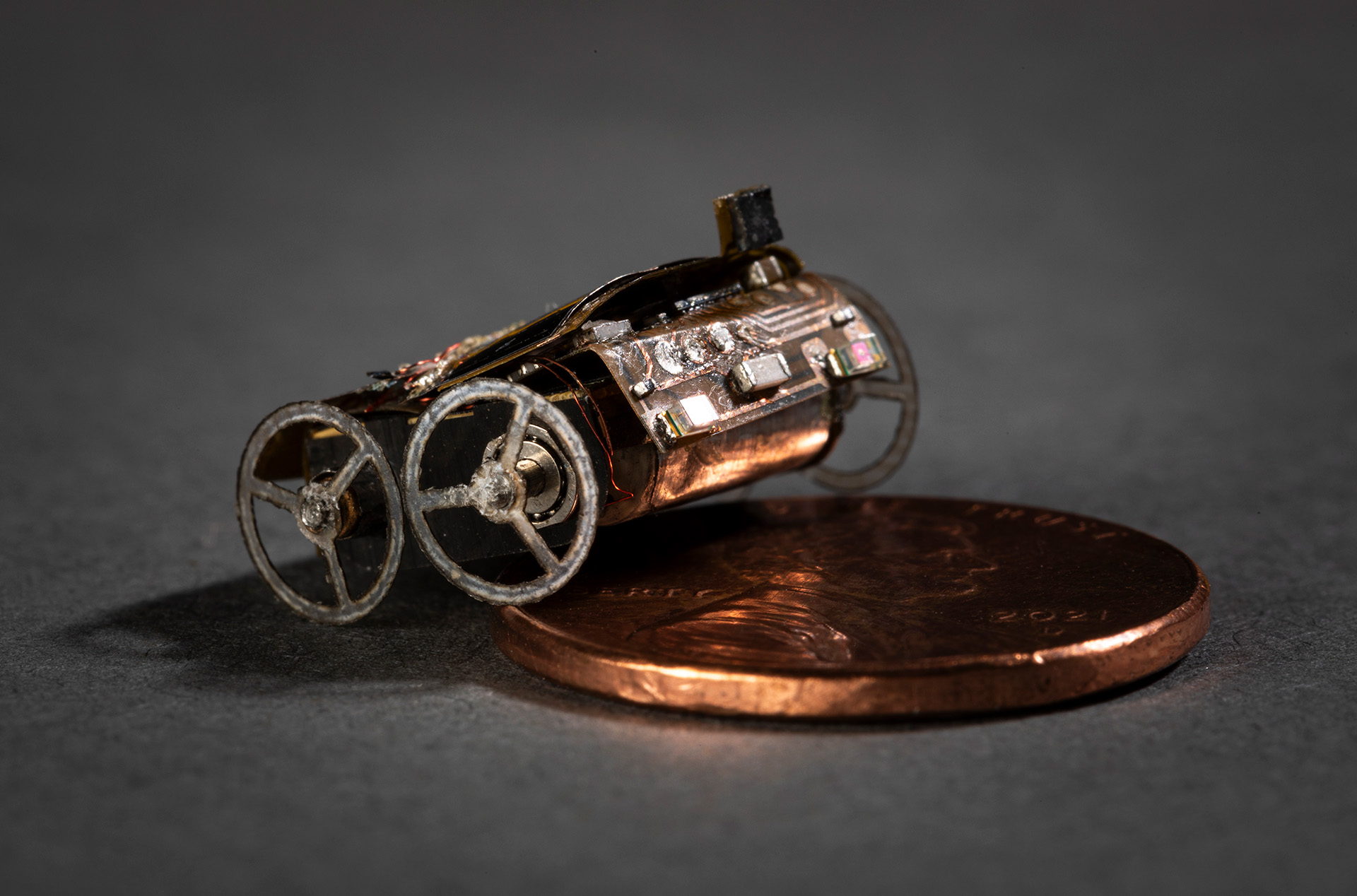

The goal of this project is to enable efficient on-device deep learning models on battery-free, mobility-based sensing robots. We target MilliMobile (millimobile.cs.washington.edu), a one-square-centimeter microrobotic platform with only 512 KB of RAM and 1 MB of flash memory, which makes running traditional models onboard challenging. We address this challenge by integrating intermittent computing and motion with image-based sensing, so that the system performs high-power actions (locomotion, inference, and communication) only when specific conditions are satisfied. Event-based vision and intermittent computing will also be leveraged to maximize the time the MCU spends in ultra-low-power modes. We train on insect images from the Insect Detect - insect classification dataset v2 and achieve almost 70% accuracy after 500 epochs, while the system consumes only milliwatts of power when running on the nRF52840 microcontroller.
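The condition-gated behavior described above can be illustrated with a small decision function. This is a minimal sketch under stated assumptions, not the MilliMobile firmware: the voltage thresholds, the mean-absolute-difference event test, and every name below (`frame_event`, `choose_action`, `V_MOVE_MV`, and so on) are hypothetical. It shows one way to gate locomotion, inference, and communication on stored energy and on a simple event-based trigger (a large enough change between consecutive frames), falling back to sleep otherwise.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical capacitor-voltage thresholds in millivolts (not from the paper).
 * Costlier actions require more stored energy before they are attempted. */
#define V_MOVE_MV  2800u
#define V_INFER_MV 3000u
#define V_TX_MV    3200u

/* Hypothetical event threshold: mean absolute per-pixel change between frames. */
#define EVENT_MEAN_DIFF 8u

typedef enum { ACT_SLEEP, ACT_MOVE, ACT_INFER, ACT_TX } action_t;

/* Simple frame-differencing trigger: returns true when the average absolute
 * pixel change between two grayscale frames of n pixels reaches the threshold. */
bool frame_event(const uint8_t *prev, const uint8_t *cur, size_t n) {
    assert(prev != NULL && cur != NULL);
    uint32_t sum = 0;
    for (size_t i = 0; i < n; i++) {
        int d = (int)cur[i] - (int)prev[i];
        sum += (uint32_t)abs(d);
    }
    /* Compare sum >= threshold * n to avoid a division. */
    return n > 0 && sum >= (uint32_t)EVENT_MEAN_DIFF * n;
}

/* Pick the next action from the measured storage voltage, whether an image
 * event fired, and whether a classification result is waiting to be sent. */
action_t choose_action(uint32_t vcap_mv, bool event, bool result_pending) {
    if (result_pending && vcap_mv >= V_TX_MV)
        return ACT_TX;      /* enough energy to report a result */
    if (event && vcap_mv >= V_INFER_MV)
        return ACT_INFER;   /* scene changed: run the classifier */
    if (!event && vcap_mv >= V_MOVE_MV)
        return ACT_MOVE;    /* nothing new here: relocate */
    return ACT_SLEEP;       /* harvest energy in a low-power mode */
}
```

For example, with a result pending and 3300 mV stored, `choose_action` returns `ACT_TX`; with an event but only 2900 mV, it returns `ACT_SLEEP` so the robot stays put and recharges rather than moving away from the interesting scene. On real hardware the voltage would come from an ADC reading and `ACT_SLEEP` would map to the SoC's low-power wait; both are outside this sketch.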
