Luis Ceze

Professor

Paul G. Allen School of Computer Science and Engineering

University of Washington

Paul G. Allen Center, Room 576

I help run three research groups: Sampa on hardware/software systems, SAMPL on machine learning systems and architecture, and MISL on using DNA for information technology applications.

I also have the immense privilege of leading the team at OctoML, where I am co-founder and CEO.

My official UW CSE webpage, and my perpetually semi-up-to-date CV.

An overview of our work on approximate computing can be found here. And try out our language, compiler and benchmarking infrastructure for approximate computing.

Check out the videos from my Hardware/Software Interface class

Take a look at TVM, our recently-released end-to-end stack for deep learning - tvm.ai.

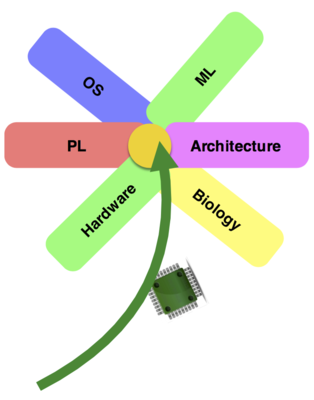

I work at the intersection of computer architecture, programming languages, machine learning and biology. My goal is to explore new and better ways to build computing systems.

Selected, recent-ish publications (Google Scholar Profile, full curated list):

"DietCode: Automatic Optimization for Dynamic Tensor Programs", MLSys'22 (to appear).

"SRIFTY: Swift and Thrifty Distributed Neural Network Training on the Cloud", MLSys'22 (to appear).

"Synthetic DNA applications in information technology", Nature Communications 2022.

"Multiplexed direct detection of barcoded protein reporters on a nanopore array", Nature Biotechnology 2022.

"Scaling DNA data storage with nanoscale electrode wells", Science Advances 2021.

"Molecular-level similarity search brings computing to DNA data storage", Nature Communications 2021.

"Reticle: a virtual machine for programming modern FPGAs", PLDI'21.

"Rapid and robust assembly and decoding of molecular tags with DNA-based nanopore signatures", Nature Communications 2020.

"Quantifying molecular bias in DNA data storage", Nature Communications 2020.

"LastLayer: Toward hardware and software continuous integration", IEEE Micro 2020.

"Riptide: Fast End-to-End Binarized Neural Networks", MLsys'20.

"PLink: Discovering and Exploiting Locality for Accelerated Distributed Training on the public Cloud", MLsys'20.

"Probing the physical limits of reliable DNA data retrieval", Nature Communications'20.

"Automatic Generation of High-Performance Quantized Machine Learning Kernels", CGO'20.

"A Hardware–Software Blueprint for Flexible Deep Learning Specialization", IEEE Micro Magazine, 2019.

"DNA assembly for nanopore data storage readout", Nature Communications,19.

"Molecular digital data storage using DNA", Nature Genetics Reviews'19.

"High density DNA data storage library via dehydration with digital microfluidic retrieval", Nature Communications'19.

"DNA Data Storage and Hybrid Molecular-Electronic Computing", Proceedings of IEEE'19.

"Vignette: Perceptual Compression for Video Storage and Processing Systems.", SoCC'19.

"Puddle: A Dynamic, Error-Correcting, Full-Stack Microfluidics Platform", ASPLOS'19.

"Learning to Optimize Tensor Programs", NeurIPS'18.

"TVM:An Automated End-to-End Optimizing Compiler for Deep Learning", OSDI'18.

"Parameter Hub: a Rack-Scale Parameter Server for Distributed Deep Neural Network Training", SoCC'18.

"Architecture Considerations for Stochastic Computing Accelerators", CODES'18.

"LightDB: A DBMS for Virtual Reality Video", PVLDB'18.

"Troubleshooting Transiently-Recurring Errors in Production Systems with Blame-Proportional Logging", USENIX ATC'18.

"Random access in large-scale DNA data storage", Nature Biotechnology, Cover Feature in Mar'18.

"Application Codesign of Near-Data Processing for Similarity Search", IPDPS'18

"MATIC: Learning Around Erros for Efficient Low-Voltage Neural Network Accelerators", DATE'18, Best Paper Award.

"Clustering Billions of Reads for DNA Data Storage", NIPS'17

"Computer Security, Privacy, and DNA Sequencing: Compromising Computers with Synthesized DNA, Privacy Leaks, and More.", Usenix Security'17

"Exploring Computation-Communication Tradeoffs in Camera Systems", IISWC'17

"Customizing Progressive JPEG for Efficient Image Storage", USENIX HotStorage'17

"A Hardware-Friendly Bilateral Solver for Real-Time Virtual Reality Video", HPG'17

"VisualCloud Demonstration: A DBMS for Virtual Reality, SIGMOD'17

"WeLight:Augmenting Interpersonal Communication through Connected Lighting, CHI-LBW'17 (try it out!)

"Approximate Storage for Encrypted and Compressed Videos, ASPLOS'17

"Enabling In-network Computation with a Programmable Network Middlebox, ASPLOS'17

"Energy-Efficient Hybrid Stochastic-Binary Neural Networks for Near-Sensor Computing, DATE'17.

"Disciplined Inconsistency with Consistency Types, SOCC'16.

"A DNA-Based Archival Storage System, ASPLOS'16.

"High-Density Image Storage Using Approximate Memory Cells, ASPLOS'16.

"Optimizing Synthesis with Metasketches”, POPL'16.

"Probability Type Inference for Flexible Approximate Programming”, OOPSLA'15.

"Hardware–Software Co-Design: Not Just a Cliche”, SNAPL'15.

"Latency-Tolerant Software Distributed Shared Memory", USENIX ATC'15.

"Debugging and Monitoring Quality in Approximate Programs", ASPLOS 2015.

"SNNAP: Neural Acceleration on Programmable Logic", HPCA 2015.

"Symbolic Execution of Multithreaded Programs from Arbitrary Program Contexts", OOPSLA 2014.

"General-Purpose Code Acceleration with Limited-Precision Analog Computation", ISCA 2014.

"Expressing and Verifying Probabilistic Assertions", PLDI 2014.

"Low-Level Detection of High-Level Data Races with LARD", ASPLOS 2014.

"Approximate Storage in Solid-State Memories", MICRO 2013.

"EnerJ, the Language of Good-Enough Computing", IEEE Spectrum Feature Article.

"Input-Covering Schedules for Multithreaded Programs", OOPSLA 2013.

"DNA-based Molecular Architecture with Spatially Localized Components", ISCA 2013.

"Cooperative Empirical Failure Avoidance for Multithreaded Programs", ASPLOS 2013.

"Neural Acceleration for General-Purpose Approximate Programs", MICRO 2012 (Selected as IEEE Micro Top Picks and CACM Research Highlights).

"EnerJ: Approximate Data Types for Safe and General Low-Power Computation", PLDI 2011.

"Deterministic Process Groups in dOS", OSDI 2010.

"DMP: Deterministic Shared Memory Multiprocessing", ASPLOS 2009. (Selected for the IEEE Micro Top Picks 2009).

We have released Grappa, a runtime system for large-scale irregular applications (e.g., graph analytics); approxbench.org; ACCEPT, a set of tools and benchmarks for approximate computing research; and TVM/VTA, an end-to-end HW/SW system for deep learning acceleration.

Take a look at the collectively written white-paper on 21st Century computer architecture research, and a vision for the next 15 years of architecture research.

I have the pleasure of working with the following awesome post-docs:

Peter Ney (w/ Yoshi Kohno) - cyber-bio security

I have the pleasure of working with the following incredible graduate students:

Zihao Ye - ML systems

I was born in São Paulo, Brazil.

I received my PhD in Computer Science from the University of Illinois at Urbana-Champaign. I got my BEng and MEng in Electrical Engineering from the University of São Paulo, Brazil.

I am very fortunate to have such a happy family.

My (much smarter than me) brother was freezing in Michigan but having fun with aerospace engineering; now he lives just a few miles away!

I am always happy because she exists.